06. Optional: Deploying your Inference Project

Optional Deployment

Now that you have trained your network, are you ready to see it in action? Great, let’s get started!

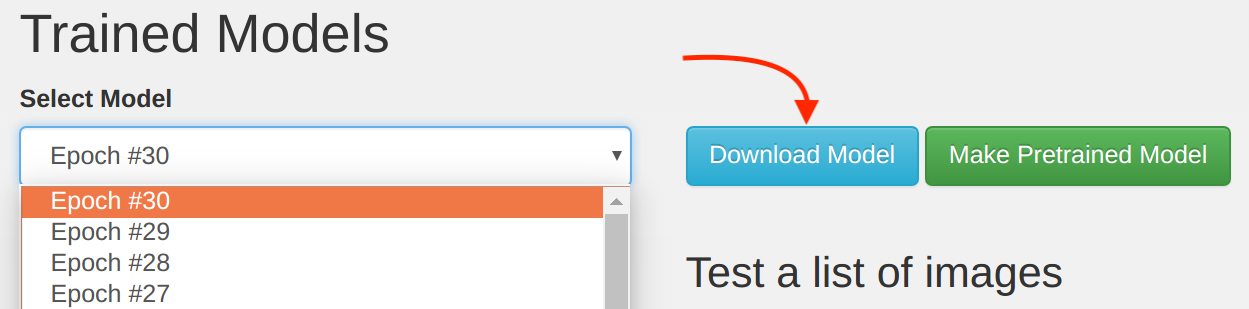

The first step is to download your model from DIGITS to your Jetson. To do this, open a browser on the Jetson device and navigate to your DIGITS server. From there, download your model.

Next, create a folder named after your model and extract the contents of the downloaded archive into that folder with the tar -xzvf command.

Then, set an environment variable called NET to the path of the model folder. Do this by entering something like export NET=/home/user/Desktop/my_model into the terminal. The exact command depends on the shell you are using; export works in bash and other POSIX-style shells.
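The folder, extraction, and NET steps can be sketched as a small shell helper. This is only a sketch under a few assumptions: the function name unpack_model is hypothetical, the downloaded file is assumed to be a gzipped tar archive (as the tar -xzvf command implies), and the shell is assumed to be bash or another POSIX-style shell.

```shell
# Hypothetical helper (not part of DIGITS or the Jetson tools).
# Usage: unpack_model <downloaded_archive.tar.gz> <model_folder>
unpack_model() {
    archive="$1"
    target="$2"

    # Create the model folder if it does not exist yet.
    mkdir -p "$target" || return 1

    # Extract the archive contents into the model folder.
    tar -xzvf "$archive" -C "$target" || return 1

    # Store an absolute path so NET stays valid after changing directories.
    NET="$(cd "$target" && pwd)"
    export NET

    # List the extracted files so you can find the .prototxt, .caffemodel
    # and labels files that the launch command refers to.
    ls "$NET"
}
```

Paste or source the function in the same terminal you will launch the demo from, so that the exported NET is still set. A call might look like unpack_model ~/Downloads/your_model.tar.gz ~/Desktop/my_model, where both paths are placeholders for your own archive and folder.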

Navigate to the jetson-inference folder, then into the folder containing the executable binaries, and launch imagenet or detectnet like so:

./imagenet-camera --prototxt=$NET/deploy.prototxt --model=$NET/your_model_name.caffemodel --labels=$NET/labels.txt --input_blob=data --output_blob=softmax

You will then see real-time results from your Jetson camera!

If you want to drive an actuator based on the classifier's output, you can edit either the imagenet or detectnet C++ source file accordingly.

Here is an example of calling a servo action based on a classification result. It can be inserted into the code here at line 168:

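// Requires <iostream> and <string> to be included at the top of the file.
// Look up the label text for the class index returned by the classifier.
const std::string class_str(net->GetClassDesc(img_class));

if (class_str == "Bottle") {
    std::cout << "Bottle" << std::endl;
    // Invoke servo action
}
else if (class_str == "Candy_Box") {
    std::cout << "Candy_Box" << std::endl;
    // Do not invoke servo action
}
else {
    // Catch anything else here
}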